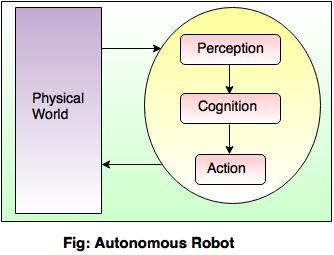

What is perception in AI?

Perception is the process of acquiring, selecting, organizing, and interpreting the sensory information captured from the real world.

For example: Human beings have senses such as touch, taste, smell, sight, and hearing. The information received through the sensory receptors is transmitted to the brain, which organizes it.

Based on the received information, an action is taken by interacting with the environment, for instance to manipulate objects or navigate around them.

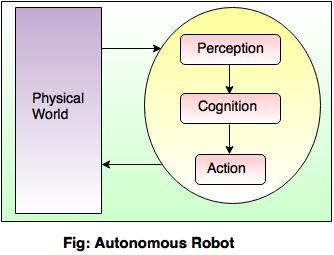

Perception and action are very important concepts in the field of Robotics. The following figures show the complete autonomous robot.

There is one important difference between an artificial intelligence program and a robot. The AI program operates in a computer-simulated environment, while the robot operates in the physical world.

For example:

In chess, an AI program can choose a move by searching different nodes, but it has no facility to touch or sense the physical world.

However, the chess playing robot can make a move and grasp the pieces by interacting with the physical world.

Image formation in digital camera

Image formation is a physical process that captures the objects in a scene through a lens and creates a 2-D image.

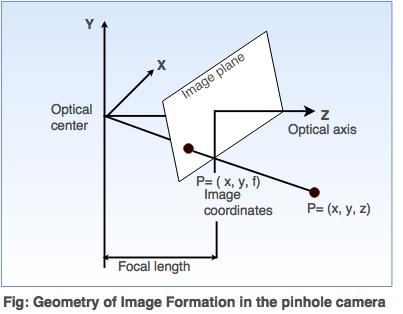

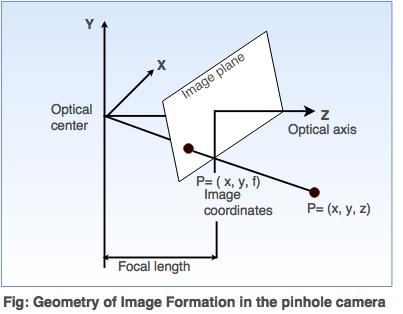

Let's understand the geometry of a pinhole camera shown in the following diagram.

In the above figure, the optical axis is perpendicular to the image plane, and the image plane is generally placed in front of the optical center.

Let P be a point in the scene with coordinates (X, Y, Z),

and let P' be its image on the image plane with coordinates (x, y, z).

If f is the focal length, measured from the optical center, then by the properties of similar triangles we obtain:

$$-\frac{x}{f} = \frac{X}{Z} \quad\Rightarrow\quad x = -\frac{fX}{Z} \tag{i}$$

$$-\frac{y}{f} = \frac{Y}{Z} \quad\Rightarrow\quad y = -\frac{fY}{Z} \tag{ii}$$

These equations define an image formation process called perspective projection.
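As a minimal sketch of equations (i) and (ii), the function below projects a scene point onto the image plane. The function name `project` and the sample coordinates are illustrative assumptions, not part of the original text.

```python
def project(point, f):
    """Project a scene point (X, Y, Z) onto the image plane of a pinhole camera."""
    X, Y, Z = point
    if Z == 0:
        raise ValueError("Z must be non-zero for perspective projection")
    # Equations (i) and (ii): x = -f*X/Z, y = -f*Y/Z.
    # The minus sign means the image is inverted.
    return (-f * X / Z, -f * Y / Z)


# The same point seen at twice the depth appears half as far from the image center.
near = project((2.0, 1.0, 4.0), f=1.0)
far = project((2.0, 1.0, 8.0), f=1.0)
print(near)  # (-0.5, -0.25)
print(far)   # (-0.25, -0.125)
```

The division by Z is what makes distant objects look smaller, which is the defining property of perspective projection.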

What is the purpose of edge detection?

Edge detection is an operation used in image processing.

The main goal of edge detection is to construct an idealized outline (line drawing) of the objects in an image.

Discontinuities in image brightness are caused by:

i) Depth discontinuities

ii) Surface orientation discontinuities

iii) Reflectance discontinuities

iv) Illumination.
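Since edges correspond to brightness discontinuities, one simple way to find them is to threshold the brightness gradient. The sketch below is only an illustration under that assumption. The function name, the toy image, and the threshold value are hypothetical, and practical detectors are considerably more elaborate.

```python
def detect_edges(image, threshold):
    """Return the (row, col) positions where brightness changes sharply."""
    edges = set()
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            # Forward differences approximate the brightness gradient.
            dx = image[r][c + 1] - image[r][c] if c + 1 < cols else 0
            dy = image[r + 1][c] - image[r][c] if r + 1 < rows else 0
            if (dx * dx + dy * dy) ** 0.5 > threshold:
                edges.add((r, c))
    return edges


# A dark region (10) next to a bright region (200): the edge lies between columns 1 and 2.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
print(sorted(detect_edges(image, threshold=50)))  # [(0, 1), (1, 1), (2, 1)]
```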

3D-Information extraction using vision

Why is extraction of 3-D information necessary?

3-D information extraction plays an important role in tasks such as manipulation, navigation, and recognition. It deals with the following aspects:

1. Segmentation of the scene

Segmentation groups the array of image pixels into regions that correspond to semantically meaningful entities in the scene. A good segmentation satisfies two criteria:

Each region should be homogeneous.

The union of two neighboring regions should not be homogeneous.

Thresholding is the simplest segmentation technique. It applies when an object of roughly homogeneous intensity sits on a background with a different intensity level: the pixels are partitioned according to their intensity values.
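Thresholding as described above can be sketched in a few lines. The threshold value `t` and the toy image are illustrative assumptions. In practice the threshold is usually chosen from the image histogram.

```python
def threshold_segment(image, t):
    """Label each pixel 1 (object) if brighter than t, else 0 (background)."""
    return [[1 if pixel > t else 0 for pixel in row] for row in image]


# A bright 2x2 object on a dark background.
image = [
    [12, 15, 11, 14],
    [13, 210, 205, 12],
    [10, 215, 220, 16],
    [11, 14, 13, 12],
]
mask = threshold_segment(image, t=128)
for row in mask:
    print(row)
# [0, 0, 0, 0]
# [0, 1, 1, 0]
# [0, 1, 1, 0]
# [0, 0, 0, 0]
```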

2. To determine the position and orientation of each object

Determination of the position and orientation of each object relative to the observer is important for manipulation and navigation tasks.

For example: Suppose a person goes to a store to buy something. While moving around, they must know where things are and where the obstacles lie, so that they can plan a path that avoids them.

The orientation of an object is specified in terms of a three-dimensional rotation.

3. To determine the shape of each and every object

When the camera moves around an object, the distance and orientation of that object change, but its shape is preserved.

For example: If an object is a cube, that fact does not change as the camera moves. However, it is difficult to represent global shape in a way that handles the wide variety of objects present in the real world.

Knowing the shape of an object helps in manipulation tasks, for example when deciding how to grasp the object from a particular place.

Shape also plays a significant role in object recognition, where geometric shape, together with color and texture, provides the cues used to identify and classify objects.

However, a question arises: how can we recover 3-D information from the 2-D image formed by a pinhole camera?

A number of cues are available in the visual stimulus for 3-D information extraction, such as motion, binocular stereopsis, texture, shading, and contour. Each of these cues relies on background assumptions about the physical scene to provide an interpretation.

What is planning in AI?

The planning in Artificial Intelligence is about the decision making tasks performed by the robots or computer programs to achieve a specific goal.

The execution of planning is about choosing a sequence of actions with a high likelihood to complete the specific task.

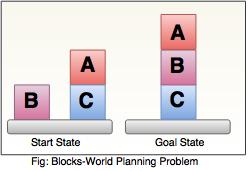

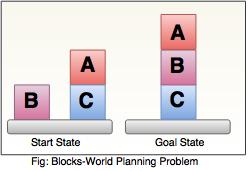

This blocks-world problem is known as the Sussman anomaly.

Noninterleaved planners of the early 1970s were unable to solve it, which is why it is considered anomalous.

When two subgoals G1 and G2 are given, a noninterleaved planner produces either a plan for G1 concatenated with a plan for G2, or vice-versa.

In the blocks-world problem, three blocks labeled 'A', 'B', and 'C' rest on a flat surface. Only one block can be moved at a time to achieve the goal.

The start state and goal state are shown in the following diagram.
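To make the problem concrete, here is a small breadth-first planner for the three-block world. It assumes the standard textbook formulation of the Sussman anomaly (C starts on A, with A and B on the table, and the goal is A on B and B on C), which may differ in detail from the diagram. The state encoding and function names are illustrative.

```python
from collections import deque


def clear_blocks(state):
    """Blocks that have nothing on top of them."""
    supports = set(state.values())
    return [block for block in state if block not in supports]


def successors(state):
    """Yield (move, new_state) for every legal single-block move."""
    clear = clear_blocks(state)
    for block in clear:
        for target in clear + ["table"]:
            if target == block or state[block] == target:
                continue
            new_state = dict(state)
            new_state[block] = target
            yield (block, target), new_state


def plan(start, goal):
    """Breadth-first search for a shortest sequence of moves that reaches the goal."""
    def key(state):
        return tuple(sorted(state.items()))

    frontier = deque([(start, [])])
    seen = {key(start)}
    while frontier:
        state, moves = frontier.popleft()
        if all(state[block] == on for block, on in goal.items()):
            return moves
        for move, nxt in successors(state):
            if key(nxt) not in seen:
                seen.add(key(nxt))
                frontier.append((nxt, moves + [move]))
    return None


# Each entry maps a block to whatever it is resting on.
start = {"A": "table", "B": "table", "C": "A"}
goal = {"A": "B", "B": "C"}
print(plan(start, goal))  # [('C', 'table'), ('B', 'C'), ('A', 'B')]
```

The shortest plan clears A (work toward the first subgoal), then achieves "B on C", and only then finishes "A on B". The two subgoals are interleaved, which is exactly what a planner that concatenates one complete sub-plan after the other cannot produce.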

Components of Planning System

Planning consists of the following important steps:

Choose the best rule to apply next, based on the best available heuristic information.

Apply the chosen rule for computing the new problem state.

Detect when a solution has been found.

Detect dead ends so that they can be abandoned and the system’s effort is directed in more fruitful directions.

Detect when an almost correct solution has been found.

Goal stack planning

This is one of the most important planning algorithms, which is specifically used by STRIPS.

The algorithm uses a stack to hold the goals that remain to be satisfied and the actions chosen to satisfy them. A knowledge base holds the current state and the available actions.

Goal stack is similar to a node in a search tree, where the branches are created if there is a choice of an action.

The important steps of the algorithm are as stated below:

i. Start by pushing the original goal on the stack. Repeat the following steps until the stack becomes empty.

ii. If the stack top is a compound goal, push its unsatisfied subgoals on the stack.

iii. If the stack top is a single unsatisfied goal, replace it with an action that achieves it and push the action's preconditions on the stack.

iv. If the stack top is an action, pop it from the stack, execute it, and update the knowledge base with the effects of the action.

v. If the stack top is a satisfied goal, pop it from the stack.
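The steps above can be sketched as follows. This is a simplified illustration, not the original STRIPS implementation: it always picks the first action that achieves a goal, does no backtracking, and the `Action` class and the door-and-key domain are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset
    add: frozenset
    delete: frozenset = frozenset()


def goal_stack_plan(state, goals, actions, max_steps=1000):
    """Return a list of action names that achieves the goals from the given state."""
    state = set(state)            # knowledge base: the current state
    plan = []
    stack = [frozenset(goals)]    # start with the original (compound) goal
    for _ in range(max_steps):
        if not stack:
            return plan
        top = stack.pop()
        if isinstance(top, Action):
            # Action on top: execute it and apply its effects to the state.
            state = (state - top.delete) | top.add
            plan.append(top.name)
        elif isinstance(top, frozenset):
            # Compound goal: keep it for re-checking and push unsatisfied subgoals.
            unsatisfied = [g for g in top if g not in state]
            if unsatisfied:
                stack.append(top)
                stack.extend(sorted(unsatisfied))
        elif top not in state:
            # Single unsatisfied goal: replace it by an action plus its preconditions.
            action = next((a for a in actions if top in a.add), None)
            if action is None:
                raise ValueError(f"no action achieves {top!r}")
            stack.append(action)
            stack.append(action.preconditions)
        # A satisfied single goal is simply dropped.
    raise RuntimeError("no plan found within max_steps")


actions = [
    Action("pick_up_key", frozenset(), frozenset({"has_key"})),
    Action("open_door", frozenset({"has_key"}), frozenset({"door_open"})),
    Action("enter_room", frozenset({"door_open"}), frozenset({"in_room"})),
]
steps = goal_stack_plan(state=set(), goals={"in_room"}, actions=actions)
print(steps)  # ['pick_up_key', 'open_door', 'enter_room']
```

Because this sketch commits to one action per goal, it can loop or fail on problems whose subgoals interact. The `max_steps` guard is there for that case.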

Non-linear planning

Non-linear planning uses a goal set instead of a goal stack and includes all possible subgoal orderings in its search space. It handles goal interactions by interleaving.

Advantage of non-linear planning

Non-linear planning may produce an optimal solution with respect to plan length (depending on the search strategy used).

Disadvantages of non-linear planning

It requires a larger search space, since all possible goal orderings are taken into consideration.

The algorithm is more complex to understand.

Algorithm

1. Choose a goal 'g' from the goalset.

2. If 'g' does not match the state, then:

   a. Choose an operator 'o' whose add-list matches goal 'g'.

   b. Push 'o' on the opstack.

   c. Add the preconditions of 'o' to the goalset.

3. While all preconditions of the operator on top of the opstack are met in the state:

   a. Pop operator 'o' from the top of the opstack.

   b. state = apply(o, state)

   c. plan = [plan; o]

What is learning?

According to Herbert Simon, learning denotes changes in a system that enable a system to do the same task more efficiently the next time.

Arthur Samuel stated that "Machine learning is the subfield of computer science that gives computers the ability to learn without being explicitly programmed."

In 1997, Mitchell proposed that "A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E."

The main purpose of machine learning is to study and design algorithms that can be used to produce predictions from a given dataset.

Besides this, in machine learning an agent uses its percepts not only for acting but also for improving its future performance.

The following tasks must be learned by an agent.

To predict or decide the result state for an action.

To know the value of each state (to understand which states have high or low value).

To keep record of relevant percepts.

Why do we require machine learning?

Machine learning plays an important role in improving and understanding the efficiency of human learning.

Machine learning is used to discover new things that are not known to many human beings.

Various forms of learning are explained below:

1. Rote learning

Rote learning is possible on the basis of memorization.

This technique mainly focuses on memorization by avoiding the inner complexities. So, it becomes possible for the learner to recall the stored knowledge.

For example: When a learner learns a poem or song by reciting or repeating it, without knowing the actual meaning of the poem or song.

2. Induction learning (Learning by example).

Induction learning is carried out on the basis of supervised learning.

In this learning process, the system induces a general rule from a set of observed instances.

However, class definitions can be constructed with the help of a classification method.

For Example:

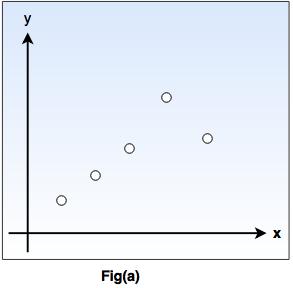

Consider that 'ƒ' is the target function and an example is a pair (x, ƒ(x)), where 'x' is the input and ƒ(x) is the output of the function applied to 'x'.

Given problem: Find a hypothesis h such that h ≈ ƒ

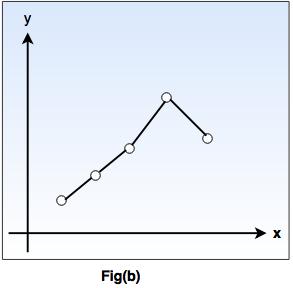

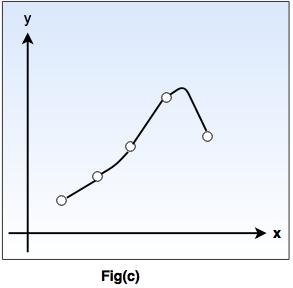

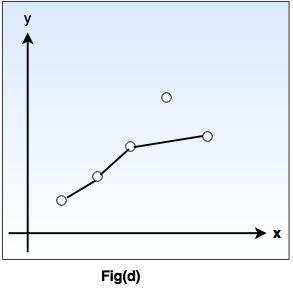

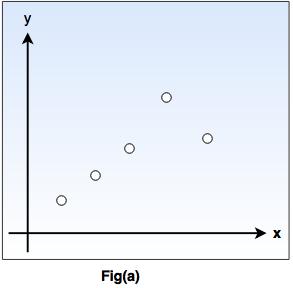

So, in the following fig-a, points (x, y) are given in the plane such that y = ƒ(x), and the task is to find a function h(x) that fits the points well.

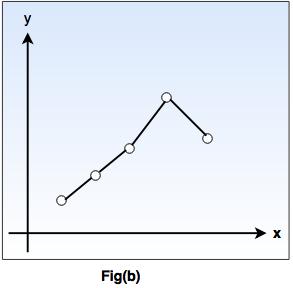

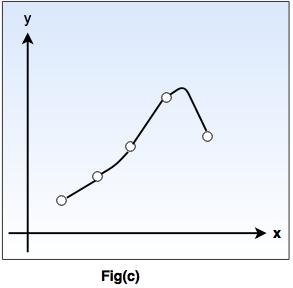

In fig-b, a piecewise-linear 'h' function is given, while the fig-c shows more complicated 'h' function.

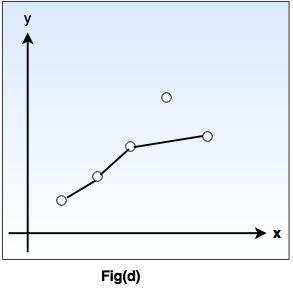

As shown in fig.(d), we have a function that apparently ignores one of the example points, but fits the others with a simple function. The true ƒ is unknown, so there are many choices for h, and without further knowledge we have no way to prefer (b), (c), or (d).
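The choice between hypotheses can be illustrated with a small sketch that fits two different functions h to the same examples: a least-squares straight line and a polynomial that passes through every point. The data points and function names are made up for illustration and do not correspond to the figures.

```python
def fit_line(points):
    """Least-squares straight line h(x) = a*x + b."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def fit_polynomial(points):
    """Lagrange polynomial that passes exactly through every example."""
    def h(x):
        total = 0.0
        for i, (xi, yi) in enumerate(points):
            term = yi
            for j, (xj, _) in enumerate(points):
                if i != j:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return h


def squared_error(h, points):
    """Sum of squared differences between h(x) and the observed outputs."""
    return sum((h(x) - y) ** 2 for x, y in points)


examples = [(0, 0.0), (1, 1.1), (2, 1.9), (3, 3.2), (4, 3.9)]
line = fit_line(examples)
poly = fit_polynomial(examples)

print("line error:", squared_error(line, examples))
print("poly error:", squared_error(poly, examples))
print("predictions at x = 6:", line(6), poly(6))
```

The polynomial fits the examples perfectly while the line does not, yet the two disagree about unseen inputs. The examples alone cannot tell us which hypothesis is closer to the true ƒ.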

3. Learning by taking advice

This is the easiest and simplest way of learning.

In this type of learning, a programmer writes a program that gives the computer instructions for performing a task. Once it is learned (i.e. programmed), the system will be able to do new things.

There can be several sources of advice, such as humans (experts), the internet, etc.

However, this type of learning requires more inference than rote learning.

As the stored knowledge in knowledge base gets transformed into an operational form, the reliability of the knowledge source is always taken into consideration.

Explanation based learning

Explanation-based learning (EBL) deals with an idea of single-example learning.

Instance-based learning usually requires a substantial number of training instances, but this raises two points:

i. It is often difficult to obtain such a number of training instances.

ii. Sometimes a single example is enough to learn certain things effectively, especially when we already have enough knowledge.

Hence, it is clear that instance-based learning is more data-intensive, data-driven while EBL is more knowledge-intensive, knowledge-driven.

Initially, an EBL system accepts a training example.

On the basis of the given goal concept, an operationality criterion, and a domain theory (usually a set of rules that describe relationships between objects and actions in a domain), it "generalizes" the training example to describe the goal concept while satisfying the operationality criterion.

Thus, several applications are possible for the knowledge acquisition and engineering aspects.

Learning in Problem Solving

Humans have a tendency to learn by solving various real world problems.

Whatever the form of representation or the exact entity involved, this problem-solving principle is based on reinforcement learning.

An action that results in a desirable outcome is repeated, while an action that results in an undesirable outcome is avoided.

As the outcomes have to be evaluated, this type of learning also involves the definition of a utility function. This function shows how much a particular outcome is worth.

There are several research issues which include the identification of the learning rate, time and algorithm complexity, convergence, representation (frame and qualification problems), handling of uncertainty (ramification problem), adaptivity and "unlearning" etc.

In reinforcement learning, the system (and thus the developer) knows the desirable outcomes but does not know which actions lead to them.

In such a problem or domain, the effects of performing the actions are usually compounded with side effects. Thus, it becomes impossible to specify in advance the actions to be performed for the given parameters.

Q-Learning is the most widely used reinforcement learning algorithm.

The core of the algorithm is a simple value-iteration update. For each state s from the state set S, and for each action a from the action set A, it is possible to calculate an update to its expected discounted reward value with the following expression:

$$Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha_t(s_t, a_t)\left[r_t + \gamma \max_{a} Q(s_{t+1}, a) - Q(s_t, a_t)\right]$$

where $r_t$ is the real reward at time $t$, $\alpha_t(s, a)$ are the learning rates such that $0 \le \alpha_t(s, a) \le 1$, and $\gamma$ is the discount factor such that $0 \le \gamma < 1$.
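The update rule can be seen in action with a minimal tabular sketch. Everything about the environment is an assumption made for illustration: a five-cell corridor with a reward of 1 at the right end, a constant learning rate, and epsilon-greedy exploration with random tie-breaking.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor; the goal is the last cell."""
    rng = random.Random(seed)
    actions = (-1, +1)  # move left or move right
    Q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            if rng.random() < epsilon:
                a = rng.choice(actions)  # explore
            else:
                best = max(Q[(s, b)] for b in actions)
                a = rng.choice([b for b in actions if Q[(s, b)] == best])  # exploit
            s_next = min(max(s + a, 0), goal)
            r = 1.0 if s_next == goal else 0.0
            best_next = max(Q[(s_next, b)] for b in actions)
            # Q(s,a) <- Q(s,a) + alpha * [r + gamma * max_a' Q(s',a') - Q(s,a)]
            Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])
            s = s_next
    return Q


Q = q_learning()
for s in range(4):
    print(s, round(Q[(s, -1)], 3), round(Q[(s, +1)], 3))
```

After training, moving right has the higher Q-value in every non-goal state, and the values shrink by roughly a factor of γ per step away from the goal.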

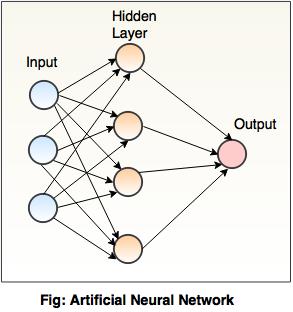

What is a neural network?

According to Dr. Robert Hecht-Nielsen, a neural network is defined as, "A computing system made up of a number of simple, highly interconnected processing elements, which process information by their dynamic state response to external inputs".

A neural network is an artificial representation of the human brain that tries to simulate its learning process.

The human brain comprises billions of nerve cells called neurons. These neurons are connected to other cells by axons.

In a biological neural network, stimuli are received by the dendrites.

These dendrites are connected to sensory organs. Thus, the inputs are accepted from external environment by the sensory organs and are passed to dendrites. These inputs create electric impulses and travel through the neural network.

A neural network consists of a number of nodes, which are connected by links. Each link is associated with a numeric weight.

Hence, the learning takes place by updating the weights.

The units which are connected to the external environment can be used as input or output units.

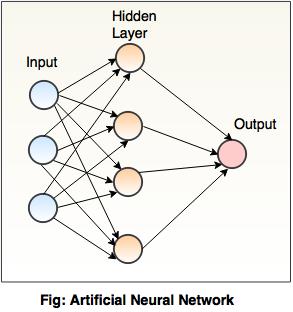

A simple model is shown in the diagram.

In a simple model, the first layer is the input layer, followed by one hidden layer, and lastly by an output layer. Each layer can contain one or more neurons.
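A forward pass through such a three-layer network can be sketched as below. The sigmoid activation, the layer sizes, and the weight values are assumptions chosen for illustration. The text does not specify them.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """Each neuron outputs sigmoid(weighted sum of its inputs + bias)."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Input layer -> one hidden layer -> output layer."""
    hidden = layer(inputs, hidden_w, hidden_b)
    return layer(hidden, output_w, output_b)


# Hypothetical weights: 2 inputs -> 2 hidden neurons -> 1 output neuron.
hidden_w = [[0.5, -0.4], [0.3, 0.8]]
hidden_b = [0.0, -0.1]
output_w = [[1.2, -0.7]]
output_b = [0.05]

output = forward([1.0, 0.0], hidden_w, hidden_b, output_w, output_b)
print(output)
```

Learning would consist of adjusting the numbers in `hidden_w`, `hidden_b`, `output_w`, and `output_b` so that the output moves closer to the desired value.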

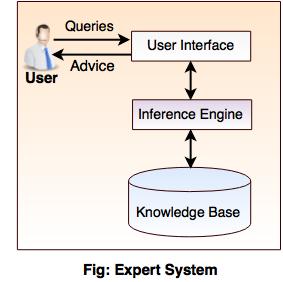

Expert System

What is an expert system?

An expert system is a computer program developed using AI technologies to solve complex problems in a particular field.

The program contains expert-level knowledge so that it can respond properly.

The expert system should be reliable, highly responsive and understandable.

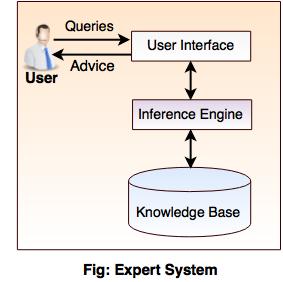

Components of Expert System

The basic components of an expert system are given below:

1. User interface

It is the software that provides communication between the user and the system.

For example: If a user asks a question, the system responds with an answer.

2. Knowledge base

Knowledge base contains expert level knowledge of a particular field that is stored in knowledge representational form.

3. Inference engine

The inference engine is the software that performs the reasoning tasks. It combines the knowledge stored in the knowledge base with the information provided by the user to infer new knowledge.
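How a knowledge base and an inference engine fit together can be sketched with a tiny forward-chaining loop. The rule format, the rules themselves, and the fact names are hypothetical. Real expert systems use much richer knowledge representations.

```python
def infer(facts, rules):
    """Forward chaining: fire rules until no new fact can be concluded."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)
                changed = True
    return known


# Knowledge base: (conditions, conclusion) rules written by a domain expert.
rules = [
    (("has_fever", "has_cough"), "possible_flu"),
    (("possible_flu", "short_of_breath"), "see_doctor"),
]

# Information supplied by the user through the user interface.
user_facts = {"has_fever", "has_cough", "short_of_breath"}

print(sorted(infer(user_facts, rules)))
# ['has_cough', 'has_fever', 'possible_flu', 'see_doctor', 'short_of_breath']
```

Keeping the rules as data, separate from the `infer` function, mirrors the separation between the knowledge base and the inference engine.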

What is a shell?

A shell is a tool specially designed around the requirements of a particular application.

Thus, the user provides the knowledge base to the shell.

For Example:

Shell manages the input and output operations

Shell processes the information provided by the user, compares it with the concepts stored in the knowledge base, and provides the solution for a particular problem.

Benefits of Expert System

Some important benefits of an expert system are listed below:

1. Knowledge Sharing.

2. Reduces errors and inconsistency

3. Allows non-expert users to reach scientifically proven conclusions.

Prolog in AI

Prolog stands for Programming in logic. It is used in artificial intelligence programming.

Prolog is a declarative programming language.

For example: When implementing the solution to a given problem, instead of specifying how to achieve a certain goal in a specific situation, the user specifies the situation (rules and facts) and the goal (query). The Prolog interpreter then derives the solution.

Prolog is useful in AI, NLP, and databases, but it is generally a poor fit for areas such as graphics or numerical algorithms.

Prolog facts

A fact is a statement that is asserted to be true.

For example: It's raining.

In Prolog, facts are used to form the statements. Facts consist of a specific item or relation between two or more items.


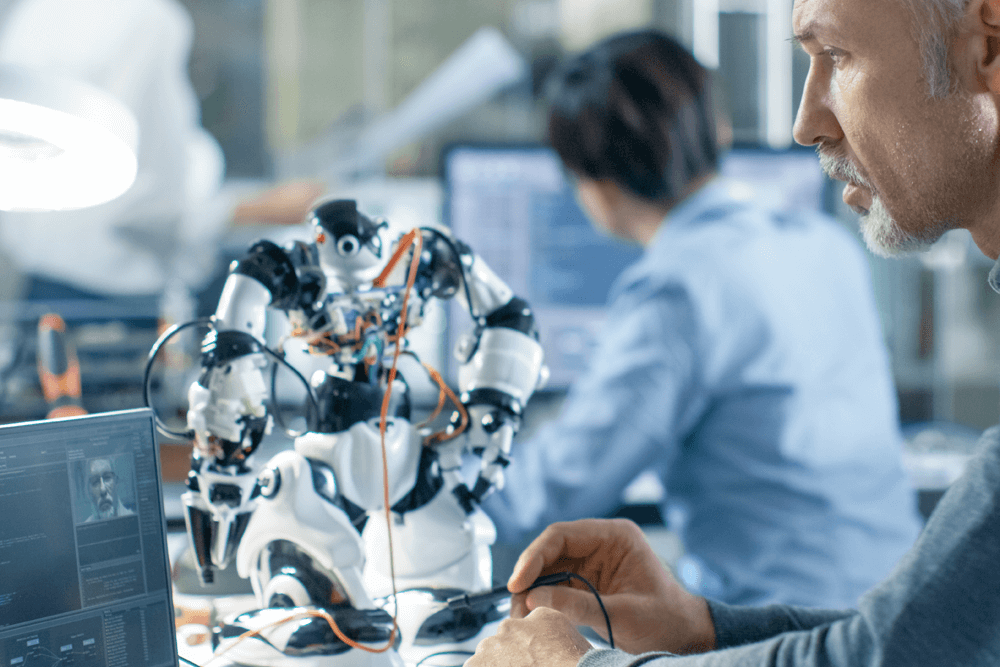

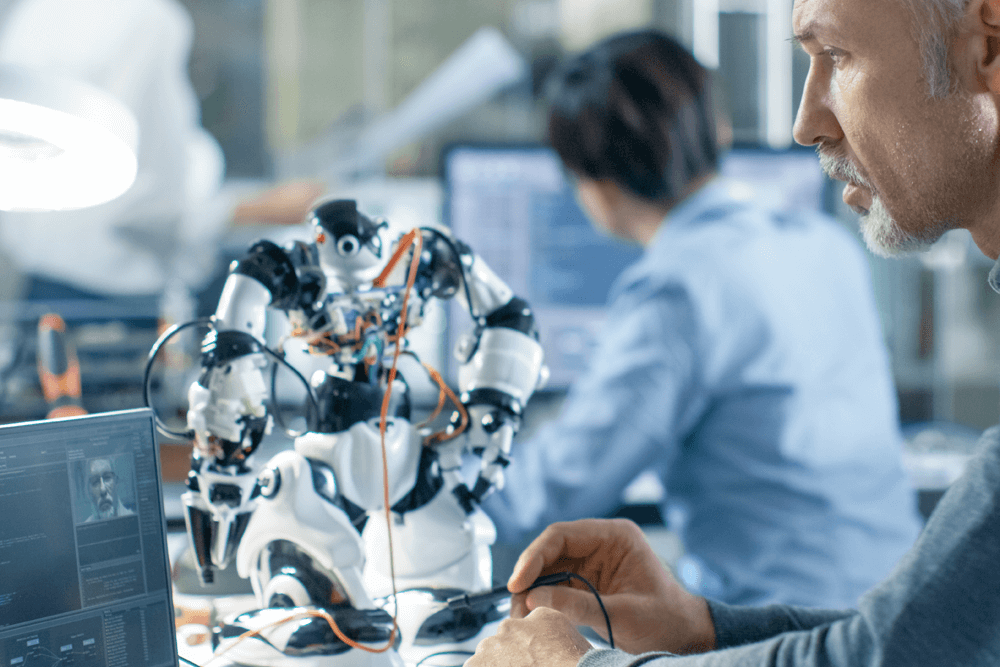

Robotics

While there continues to be confusion about the terms artificial intelligence (AI) and robotics, they are two separate fields of technology and engineering. However, when combined, you get an artificially intelligent robot where AI acts as the brain, and the robotics acts as the body to enable robots to walk, see, speak, smell and more.

Let’s look at the separate fields of artificial intelligence and robotics to illustrate their differences.

What is artificial intelligence (AI)?

Artificial intelligence is a branch of computer science that creates machines capable of problem-solving and learning similarly to humans. Using some of the most innovative AI techniques, such as machine learning and reinforcement learning, algorithms can learn and modify their actions based on input from their environment without human intervention. Artificial intelligence technology is deployed at some level in almost every industry, from the financial world to manufacturing, healthcare to consumer goods and more. Google’s search algorithm and Facebook’s recommendation engine are examples of artificial intelligence that many of us use every day. For more practical examples and more in-depth explanations, check out my website section dedicated to AI.

What is robotics?

The branch of engineering/technology focused on constructing and operating robots is called robotics. Robots are programmable machines that can autonomously or semi-autonomously carry out a task. Robots use sensors to interact with the physical world and are capable of movement, but must be programmed to perform a task. Again, for more on robotics, check out my website section on robotics.

Where do robotics and AI mingle?

One of the reasons the line is blurry and people are confused about the differences between robotics and artificial intelligence is that there are artificially intelligent robots, that is, robots controlled by artificial intelligence. In combination, AI is the brain and robotics is the body. Let’s use an example to illustrate. A simple robot can be programmed to pick up an object and place it in another location and repeat this task until it’s told to stop. With the addition of a camera and an AI algorithm, the robot can “see” an object, detect what it is and determine from that where it should be placed. This is an example of an artificially intelligent robot.

Artificially intelligent robots are a fairly recent development. As research and development continue, we can expect artificially intelligent robots to start to reflect those humanoid characterizations we see in movies.

Self-aware robots

One of the barriers to robots being able to mimic humans is that robots don’t have proprioception (a sense of awareness of muscles and body parts), a sort of “sixth sense” for humans that is vital to how we coordinate movement. Roboticists have been able to give robots the sense of sight through cameras, the senses of smell and taste through chemical sensors, and hearing through microphones, but they have struggled to help robots acquire this “sixth sense” to perceive their body.

Now, using sensory materials and machine-learning algorithms, progress is being made. In one case, randomly placed sensors detect touch and pressure and send data to a machine-learning algorithm that interprets the signals.

In another example, roboticists are trying to develop a robotic arm that is as dexterous as a human arm and that can grab a variety of objects. Until recent developments, the process involved either individually training a robot to perform every task or having a machine-learning algorithm learn from an enormous dataset of experience.

Robert Kwiatkowski and Hod Lipson of Columbia University are working on “task-agnostic self-modelling machines.” Similar to an infant in its first year of life, the robot begins with no knowledge of its own body or the physics of motion. As it repeats thousands of movements it takes note of the results and builds a model of them. The machine-learning algorithm is then used to help the robot strategize about future movements based on its prior motion. By doing so, the robot is learning how to interpret its actions.

A team of USC researchers at the USC Viterbi School of Engineering believe they are the first to develop an AI-controlled robotic limb that can recover from falling without being explicitly programmed to do so. This is revolutionary work that shows robots learning by doing.

Artificial intelligence enables modern robotics. Machine learning and AI help robots to see, walk, speak, smell and move in increasingly human-like ways.

What's the Difference Between Robotics and Artificial Intelligence?

Is robotics part of AI? Is AI part of robotics? What is the difference between the two terms? We answer this fundamental question.

Robotics and artificial intelligence (AI) serve very different purposes. However, people often get them mixed up.

A lot of people wonder if robotics is a subset of artificial intelligence. Others wonder if they are the same thing.

Since the first version of this article, which we published back in 2017, the question has gotten even more confusing. The rise in the use of the word "robot" in recent years to mean any sort of automation has cast even more doubt on how robotics and AI fit together (more on this at the end of the article).

It's time to put things straight once and for all.

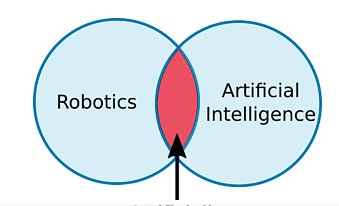

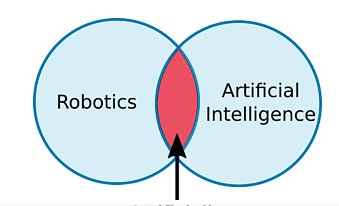

Are robotics and artificial intelligence the same thing?

The first thing to clarify is that robotics and artificial intelligence are not the same thing at all. In fact, the two fields are almost entirely separate. A Venn diagram of the two fields would look like this:

As you can see, there is one small area where the two fields overlap: Artificially Intelligent Robots. It is within this overlap that people sometimes confuse the two concepts.

To understand how these three terms relate to each other, let's look at each of them individually.

What is robotics?

Robotics is a branch of technology that deals with physical robots. Robots are programmable machines that are usually able to carry out a series of actions autonomously, or semi-autonomously.

In my opinion, there are three important factors which constitute a robot:

1. Robots interact with the physical world via sensors and actuators.

2. Robots are programmable.

3. Robots are usually autonomous or semi-autonomous.

I say that robots are "usually" autonomous because some robots aren't. Telerobots, for example, are entirely controlled by a human operator but telerobotics is still classed as a branch of robotics. This is one example where the definition of robotics is not very clear.

It is surprisingly difficult to get experts to agree on exactly what constitutes a "robot." Some people say that a robot must be able to "think" and make decisions. However, there is no standard definition of "robot thinking." Requiring a robot to "think" suggests that it has some level of artificial intelligence but the many non-intelligent robots that exist show that thinking cannot be a requirement for a robot.

However you choose to define a robot, robotics involves designing, building and programming physical robots which are able to interact with the physical world. Only a small part of robotics involves artificial intelligence.

Example of a robot: Basic cobot

A simple collaborative robot (cobot) is a perfect example of a non-intelligent robot. For example, you can easily program a cobot to pick up an object and place it elsewhere. The cobot will then continue to pick and place objects in exactly the same way until you turn it off. This is an autonomous function because the robot does not require any human input after it has been programmed. The task does not require any intelligence because the cobot will never change what it is doing.

Most industrial robots are non-intelligent.

What is artificial intelligence?

Artificial intelligence (AI) is a branch of computer science. It involves developing computer programs to complete tasks that would otherwise require human intelligence. AI algorithms can tackle learning, perception, problem-solving, language-understanding and/or logical reasoning.

AI is used in many ways within the modern world. For example, AI algorithms are used in Google searches, Amazon's recommendation engine, and GPS route finders. Most AI programs are not used to control robots.

Even when AI is used to control robots, the AI algorithms are only part of the larger robotic system, which also includes sensors, actuators, and non-AI programming.

Often — but not always — AI involves some level of machine learning, where an algorithm is "trained" to respond to a particular input in a certain way by using known inputs and outputs.

The key aspect that differentiates AI from more conventional programming is the word "intelligence." Non-AI programs simply carry out a defined sequence of instructions. AI programs mimic some level of human intelligence.

Example of a pure AI: AlphaGo

One of the most common examples of pure AI can be found in games. The classic example of this is chess, where the AI Deep Blue beat the world champion, Garry Kasparov, in 1997.

A more recent example is AlphaGo, an AI which beat Lee Sedol, the world champion Go player, in 2016. There were no robotic elements to AlphaGo. The playing pieces were moved by a human who watched the program's moves on a screen.

What are Artificially Intelligent Robots?

Artificially intelligent robots are the bridge between robotics and AI. These are robots that are controlled by AI programs.

Most robots are not artificially intelligent. Up until quite recently, all industrial robots could only be programmed to carry out a repetitive series of movements which, as we have discussed, do not require artificial intelligence. However, non-intelligent robots are quite limited in their functionality.

AI algorithms are necessary when you want to allow the robot to perform more complex tasks.

A warehousing robot might use a path-finding algorithm to navigate around the warehouse. A drone might use autonomous navigation to return home when it is about to run out of battery. A self-driving car might use a combination of AI algorithms to detect and avoid potential hazards on the road. These are all examples of artificially intelligent robots.

Example: Artificially intelligent robot

You could extend the capabilities of a collaborative robot by using AI.

Imagine you wanted to add a camera to your cobot. Robot vision comes under the category of "perception" and usually requires AI algorithms.

Say that you wanted the cobot to detect the object it was picking up and place it in a different location depending on the type of object. This would involve training a specialized vision program to recognize the different types of objects. One way to do this is by using an AI algorithm called Template Matching, which we discuss in our article How Template Matching Works in Robot Vision.

In general, most artificially intelligent robots only use AI in one particular aspect of their operation. In our example, AI is only used in object detection. The robot's movements are not really controlled by AI (though the output of the object detector does influence its movements).
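To give a feel for the idea behind template matching, the sketch below slides a small template over an image and keeps the position with the smallest sum of squared differences. This similarity measure, the function name, and the toy data are assumptions for illustration. The article referenced above may describe a different variant.

```python
def match_template(image, template):
    """Return ((row, col), score) of the window that best matches the template."""
    th, tw = len(template), len(template[0])
    best_score, best_pos = None, None
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            # Sum of squared differences between the window and the template.
            score = sum(
                (image[r + i][c + j] - template[i][j]) ** 2
                for i in range(th)
                for j in range(tw)
            )
            if best_score is None or score < best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score


# The 2x2 "object" sits at row 1, column 2 of the camera image.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 9, 0],
    [0, 0, 0, 0, 0],
]
template = [[9, 8], [7, 9]]
print(match_template(image, template))  # ((1, 2), 0)
```

A cobot could use the returned position to decide where to pick, and one template per object type to decide where to place.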

Where it all gets confusing…

As you can see, robotics and artificial intelligence are really two separate things.

Robotics involves building physical robots whereas AI involves programming intelligence.

However, there is one area where everything has got rather confusing since I first wrote this article: software robots.

Why software robots are not robots

The term "software robot" refers to a type of computer program which autonomously operates to complete a virtual task. Examples include:

Search engine "bots" (also known as "web crawlers"): These roam the internet, scanning websites and categorizing them for search.

Robotic Process Automation (RPA): These have somewhat hijacked the word "robot" in the past few years, as I explained in this article.

Chatbots: These are the programs that pop up on websites and talk to you with a set of pre-written responses.

Software bots are not physical robots; they exist only within a computer. Therefore, they are not real robots.

Some advanced software robots may even include AI algorithms. However, software robots are not part of robotics.